Network latency can be defined in two ways. In relation to overall network performance, latency is the number of milliseconds it takes for your web content to begin rendering in a visitor’s browser.

In relation to network computing, latency is the time taken for a site visitor to make an initial connection with your webserver.

By minimizing latency, you can reduce page loading time and improve your site visitors’ experience. Minimizing latency is therefore highly recommended for any e-commerce site. If you are a web developer, this article is for you.

How to Measure Latency

There are several methods that you can use to measure latency, such as:

Round-trip time (RTT): You can measure round-trip time with ping, a command-line tool that bounces a request off a user’s system to any targeted server. RTT is determined by the interval it takes for the packets to be returned to the user.

The ping value generally provides a reliable assessment of latency, although network congestion or throttling can occasionally produce a false reading.
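As a rough illustration of measuring round-trip time from code, the sketch below times TCP connections instead of sending ICMP echo requests, since ping itself usually needs elevated privileges to run from a script. This is an approximation under stated assumptions, not a replacement for ping: the function name `tcp_rtt_ms` and its parameters are hypothetical, and the result also includes DNS lookup time when a hostname is passed.

```python
import socket
import statistics
import time


def tcp_rtt_ms(host: str, port: int, samples: int = 5, timeout: float = 2.0) -> float:
    """Return the median TCP connect time to host:port in milliseconds.

    Hypothetical helper: a TCP connect completes after roughly one
    network round trip, so its duration approximates RTT.
    """
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        # create_connection returns once the TCP handshake has completed.
        with socket.create_connection((host, port), timeout=timeout):
            elapsed = time.perf_counter() - start
        timings.append(elapsed * 1000.0)
    # The median damps outliers caused by momentary congestion or throttling.
    return statistics.median(timings)
```

Calling `tcp_rtt_ms("example.com", 443)` (a placeholder host) would return one number in milliseconds. Taking the median of several samples is one simple way to soften the occasional false reading described above.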

Time to first byte (TTFB): TTFB is the time between the webserver receiving an initial user request and the visitor’s browser beginning to render the requested page. There are two ways to measure it:

- Actual TTFB: The amount of time taken for the first data byte from your server to reach a visitor’s browser. Network speed and connectivity affect this value.

- Perceived TTFB: The amount of time taken for a site visitor to perceive your web content as being rendered in their browser. The time it takes for an HTML file to be parsed impacts this metric, which is critical to both SEO and the UX.
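To make actual TTFB concrete, here is a minimal sketch that sends a plain HTTP request over a socket and times how long the first response byte takes to arrive. The helper name `ttfb_ms` and its defaults are assumptions made for illustration. It covers unencrypted HTTP only and starts the clock after the connection is established, so it leaves out DNS, connection setup, and TLS time that some tools include in their TTFB figure. Perceived TTFB depends on browser parsing and rendering, so it cannot be measured this way.

```python
import socket
import time


def ttfb_ms(host: str, port: int = 80, path: str = "/", timeout: float = 5.0) -> float:
    """Time from sending an HTTP GET to receiving the first response byte, in ms.

    Hypothetical helper for illustration; plain HTTP only.
    """
    request = (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Connection: close\r\n"
        "\r\n"
    ).encode("ascii")
    with socket.create_connection((host, port), timeout=timeout) as sock:
        start = time.perf_counter()
        sock.sendall(request)
        first = sock.recv(1)  # blocks until the first byte arrives
        elapsed = time.perf_counter() - start
    if not first:
        raise ConnectionError("server closed the connection without responding")
    return elapsed * 1000.0
```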

How CDNs Reduce Your Network Latency

To reduce network latency, you can use a CDN, which helps in several ways:

- Content caching: A CDN’s global network of strategically placed points of presence (PoPs) stores cached, compressed copies of your web pages. Because your site visitors are generally served content from the PoP closest to their location, RTT and latency decrease greatly.

- Connection optimization: Session reuse and network peering optimize connections between visitors and origin servers.

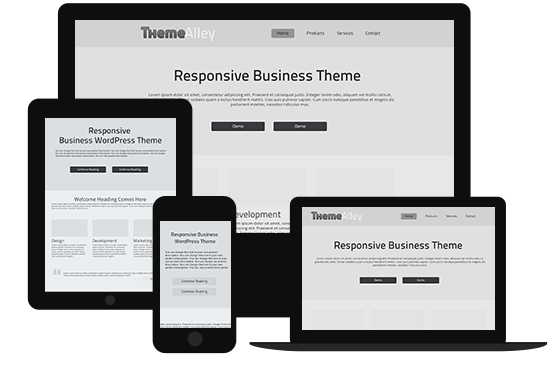

- Progressive image rendering: For any image, a progressive series of overlays is rendered in the visitor’s browser, each at a higher resolution than the last. The visitor perceives the page as rendering more quickly than it otherwise would.
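The session reuse mentioned under connection optimization can be illustrated from the client side. The sketch below is not how any particular CDN implements it; it only shows the general idea that several requests can share one connection instead of paying for a new handshake each time. The function name `fetch_paths` and its `reuse` flag are hypothetical, and it assumes a plain HTTP server that supports keep-alive.

```python
import http.client


def fetch_paths(host: str, port: int, paths: list[str], reuse: bool = True) -> list[int]:
    """GET each path over plain HTTP and return the response status codes.

    With reuse=True all requests share one connection; with reuse=False
    every request opens (and pays the handshake cost for) a new one.
    """
    statuses = []
    conn = None
    for path in paths:
        if conn is None:
            conn = http.client.HTTPConnection(host, port, timeout=5)
        conn.request("GET", path)
        response = conn.getresponse()
        response.read()  # drain the body so the connection can carry the next request
        statuses.append(response.status)
        if not reuse:
            conn.close()
            conn = None
    if conn is not None:
        conn.close()
    return statuses
```

Each new connection costs roughly one extra round trip before the request can be sent, so the saving from reuse grows with both the number of requests and the RTT to the server.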

Reducing network latency is important for keeping your website at its best, as latency affects your website’s performance and its ability to attract visitors. With these techniques, you can build a great website without worrying too much about slow page loading times.